1. Latent Semantic Analysis

Latent semantic analysis (LSA) is a natural language processing (NLP) technique to bridge terms and documents through concepts. The idea is that there are hidden concepts (latent concepts) through which words and documents are related. The heart and soul of LSA is the application of singular value decomposition (SVD) to a term-document matrix. In this tutorial, we will see how SVD is applied to documents and terms in those documents to flush out the latent concepts.

1.1. Data

Assume we have 6 documents labelled, d1, d2, d3, d4, d5, and d6. The first three documents, d1, d2, and d3 are about computer programming, and the last three documents are about financial investment. Assume each of these documents have titles, and the keywords in these titles span the terms

programming

development

language

money

finance

currency

We can build a term-document matrix of these terms and documents where the each row correspond to one of the unique terms, and the columns correspond to one of the documents. The term-document matrix is of dimensions, m x n = 6 x 6, corresponding to 6 terms (m rows) and 6 documents (n columns).

The dataframe, df, below, builds this term-document matrix; notice that the columns are labelled d1, d2, …, d6, and the rows are indexed from programming, development, …, currency. This term-document matrix is essentially a boolean matrix where the i-th, j-th entry is 1 if the i-th term shows up in the j-th document, otherwise, it is zero. Of course, you can create a different term-document matrix based on word frequency or something else like TF-IDF. Either way, the concepts and methods still apply to the term-document matrix. Furthermore, we convert df to A (convert the dataframe to a matrix); meaning, df = A.

Notice that we have a query document, q, for which we want to find similar documents. Later, we will transform the documents into data points in latent space, as well as q, and perform a search in latent space to find documents that are similar to q conceptually.

[1]:

import pandas as pd

import numpy as np

np.random.seed(37)

np.set_printoptions(precision=2)

df = pd.DataFrame(

data={

'd1': np.array([1, 1, 0, 0, 0, 0], dtype=np.float),

'd2': np.array([1, 1, 1, 0, 0, 0], dtype=np.float),

'd3': np.array([1, 0, 1, 0, 0, 0], dtype=np.float),

'd4': np.array([0, 0, 0, 1, 1, 0], dtype=np.float),

'd5': np.array([0, 0, 0, 1, 1, 1], dtype=np.float),

'd6': np.array([0, 0, 0, 1, 0, 1], dtype=np.float)

},

index=['programming', 'development', 'language', 'money', 'finance', 'currency'])

A = df.values

q = np.array([1, 0, 0, 0, 0, 0], dtype=np.float).reshape(-1, 1)

[2]:

print('A', A.shape)

A

A (6, 6)

[2]:

array([[1., 1., 1., 0., 0., 0.],

[1., 1., 0., 0., 0., 0.],

[0., 1., 1., 0., 0., 0.],

[0., 0., 0., 1., 1., 1.],

[0., 0., 0., 1., 1., 0.],

[0., 0., 0., 0., 1., 1.]])

[3]:

print('q', q.shape)

q

q (6, 1)

[3]:

array([[1.],

[0.],

[0.],

[0.],

[0.],

[0.]])

1.2. Singular value decomposition

We apply SVD to A, such that \(A = USV'\). Note the following.

\(U\) holds the coordinates of the individual terms.

\(S\) represents the latent concepts.

\(V\) holds the coordinates of the individual documents.

\(VT\), or, equivalently, \(V'\), is \(V\) transposed.

Note that \(S\) stores the eigenvalues, \(U\) stores the left-eigenvectors and \(V'\) stores the right-eigenvectors.

[4]:

from numpy.linalg import svd

U, S, VT = svd(A, full_matrices=False)

S = np.diag(S)

V = VT.transpose()

[5]:

print('U', U.shape)

U

U (6, 6)

[5]:

array([[-7.07e-01, 0.00e+00, -7.91e-17, 0.00e+00, -7.07e-01, 0.00e+00],

[-5.00e-01, 0.00e+00, -7.07e-01, 0.00e+00, 5.00e-01, 0.00e+00],

[-5.00e-01, 0.00e+00, 7.07e-01, 0.00e+00, 5.00e-01, 0.00e+00],

[ 0.00e+00, -7.07e-01, 0.00e+00, -7.91e-17, 0.00e+00, -7.07e-01],

[ 0.00e+00, -5.00e-01, 0.00e+00, -7.07e-01, 0.00e+00, 5.00e-01],

[ 0.00e+00, -5.00e-01, 0.00e+00, 7.07e-01, 0.00e+00, 5.00e-01]])

[6]:

print('S', S.shape)

S

S (6, 6)

[6]:

array([[2.41, 0. , 0. , 0. , 0. , 0. ],

[0. , 2.41, 0. , 0. , 0. , 0. ],

[0. , 0. , 1. , 0. , 0. , 0. ],

[0. , 0. , 0. , 1. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0.41, 0. ],

[0. , 0. , 0. , 0. , 0. , 0.41]])

[7]:

print('V', V.shape)

V

V (6, 6)

[7]:

array([[-5.00e-01, 0.00e+00, -7.07e-01, 0.00e+00, -5.00e-01, -0.00e+00],

[-7.07e-01, 0.00e+00, 3.08e-16, 0.00e+00, 7.07e-01, -0.00e+00],

[-5.00e-01, 0.00e+00, 7.07e-01, 0.00e+00, -5.00e-01, -0.00e+00],

[ 0.00e+00, -5.00e-01, 0.00e+00, -7.07e-01, -0.00e+00, -5.00e-01],

[ 0.00e+00, -7.07e-01, 0.00e+00, 3.08e-16, 0.00e+00, 7.07e-01],

[ 0.00e+00, -5.00e-01, 0.00e+00, 7.07e-01, 0.00e+00, -5.00e-01]])

[8]:

print('VT', VT.shape)

VT

VT (6, 6)

[8]:

array([[-5.00e-01, -7.07e-01, -5.00e-01, 0.00e+00, 0.00e+00, 0.00e+00],

[ 0.00e+00, 0.00e+00, 0.00e+00, -5.00e-01, -7.07e-01, -5.00e-01],

[-7.07e-01, 3.08e-16, 7.07e-01, 0.00e+00, 0.00e+00, 0.00e+00],

[ 0.00e+00, 0.00e+00, 0.00e+00, -7.07e-01, 3.08e-16, 7.07e-01],

[-5.00e-01, 7.07e-01, -5.00e-01, -0.00e+00, 0.00e+00, 0.00e+00],

[-0.00e+00, -0.00e+00, -0.00e+00, -5.00e-01, 7.07e-01, -5.00e-01]])

1.3. Choose k << n

We now need to choose a k that is way less than n (the number of terms), \(k << n\). Typically, you could use a few of the techniques below for starters.

Kaiser criterion

Scree plot

Proportion of variance explained

In our case, we arbitrarily choose k=3.

[9]:

from numpy.linalg import inv

k = 3

U_k = U[:, 0:k]

S_k = inv(S[0:k, 0:k])

V_k = V[:, 0:k]

VT_k = VT[0:k, :]

[10]:

print('U_k', U_k.shape)

U_k

U_k (6, 3)

[10]:

array([[-7.07e-01, 0.00e+00, -7.91e-17],

[-5.00e-01, 0.00e+00, -7.07e-01],

[-5.00e-01, 0.00e+00, 7.07e-01],

[ 0.00e+00, -7.07e-01, 0.00e+00],

[ 0.00e+00, -5.00e-01, 0.00e+00],

[ 0.00e+00, -5.00e-01, 0.00e+00]])

[11]:

print('S_k^-1', S_k.shape)

S_k

S_k^-1 (3, 3)

[11]:

array([[0.41, 0. , 0. ],

[0. , 0.41, 0. ],

[0. , 0. , 1. ]])

[12]:

print('V_k', V_k.shape)

V_k

V_k (6, 3)

[12]:

array([[-5.00e-01, 0.00e+00, -7.07e-01],

[-7.07e-01, 0.00e+00, 3.08e-16],

[-5.00e-01, 0.00e+00, 7.07e-01],

[ 0.00e+00, -5.00e-01, 0.00e+00],

[ 0.00e+00, -7.07e-01, 0.00e+00],

[ 0.00e+00, -5.00e-01, 0.00e+00]])

[13]:

print('VT_k', VT_k.shape)

VT_k

VT_k (3, 6)

[13]:

array([[-5.00e-01, -7.07e-01, -5.00e-01, 0.00e+00, 0.00e+00, 0.00e+00],

[ 0.00e+00, 0.00e+00, 0.00e+00, -5.00e-01, -7.07e-01, -5.00e-01],

[-7.07e-01, 3.08e-16, 7.07e-01, 0.00e+00, 0.00e+00, 0.00e+00]])

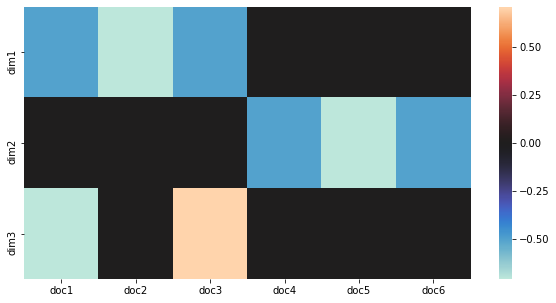

1.4. Visualize concepts to documents

Here, we plot how the concepts (dimensions in latent space) relate to each document. Note that dimension 1 relates to documents 1, 2, and 3, and dimension 2 relates to documents 4, 5, 6.

[14]:

%matplotlib inline

import matplotlib.pylab as plt

import seaborn as sns

fig, ax = plt.subplots(figsize=(10, 5))

x_labels = ['doc{}'.format(i+1) for i in range(VT_k.shape[1])]

y_labels = ['dim{}'.format(i+1) for i in range(VT_k.shape[0])]

sns.heatmap(VT_k, xticklabels=x_labels, yticklabels=y_labels, center=0.0, ax=ax)

plt.show()

1.5. Cluster in latent space

We can also cluster the documents in latent space. Note that we already know there are 2 clusters, so we use k-means to cluster and specify 2 clusters.

[15]:

from sklearn.cluster import KMeans

kmeans = KMeans(n_clusters=2).fit(V_k)

print(kmeans.labels_)

print(kmeans.cluster_centers_)

[1 1 1 0 0 0]

[[ 0.00e+00 -5.69e-01 0.00e+00]

[-5.69e-01 0.00e+00 5.55e-17]]

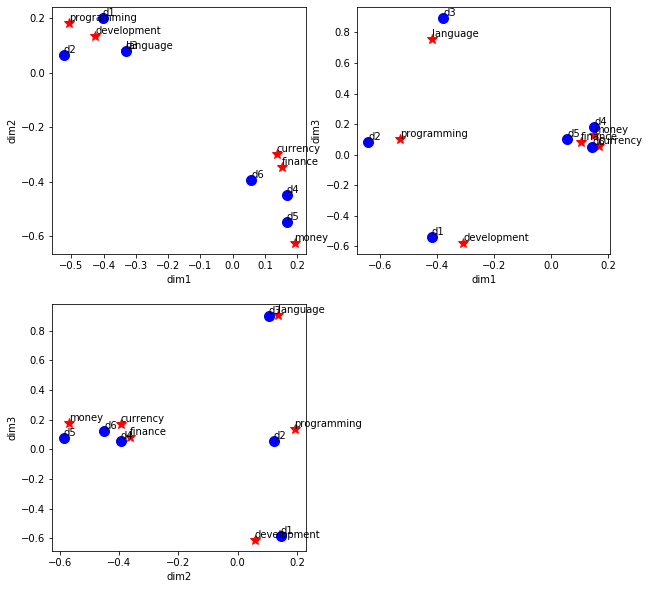

1.6. Visualize terms and documents

Here we plot the terms and documents in the latent space. Since we have selected 3 dimensions in latent space, we will plot the terms and documents with dimension 1 vs dimension 2, dimension 1 vs dimension 3, and dimension 2 vs dimension 3. Note that in the plot of dimension 1 vs dimension 2, all the programming books cluster together with the programming keywords, and all the financial books cluster together with the financial keywords.

[16]:

from scipy.special import binom

import math

def plot_terms_docs(x_index, y_index, U, V, df, ax):

def get_jitter():

return np.random.choice(np.linspace(0.05, 0.2, 20), 1)

for i in range(len(df.index)):

term = df.index[i]

x = U[i][x_index] + get_jitter()

y = U[i][y_index] + get_jitter()

ax.plot(x, y, color='red', marker='*', markersize=10.0)

ax.annotate(term, xy=(x, y),

textcoords='data',

horizontalalignment='left',

verticalalignment='bottom')

for i in range(V.shape[0]):

doc = 'd{}'.format(i + 1)

x = V[i][x_index] + get_jitter()

y = V[i][y_index] + get_jitter()

ax.plot(x, y, color='blue', marker='o', markersize=10.0)

ax.annotate(doc, xy=(x, y),

textcoords='data',

horizontalalignment='left',

verticalalignment='bottom')

n = U_k.shape[1]

num_cols = 2

num_rows = 1

num_combinations = int(binom(n, num_cols))

is_odd = False

if num_combinations % num_cols == 0:

num_rows = num_combinations / num_cols

else:

num_rows = math.floor(num_combinations / num_cols + 1)

is_odd = True

fig, axes = plt.subplots(num_rows, num_cols,

sharex=False, sharey=False,

figsize=(10, 10))

if is_odd is True:

axes[-1, -1].axis('off')

axes = axes.ravel()

ax_counter = 0

for i in range(n):

for j in range(n):

if j > i:

ax = axes[ax_counter]

plot_terms_docs(i, j, U_k, V_k, df, ax)

ax.set_xlabel('dim{}'.format(i + 1))

ax.set_ylabel('dim{}'.format(j + 1))

ax_counter += 1

1.7. Query for similar documents

This example shows how to convert a new document into latent space and then query for similar documents. Note that the new document has the term “programming”, thus, its cosine-similarity is closer to the programming documents than the financial ones.

[17]:

r = q.transpose().dot(U_k).dot(S_k)

print('q in latent space', r.shape)

r

q in latent space (1, 3)

[17]:

array([[-2.93e-01, 0.00e+00, -7.91e-17]])

[18]:

from sklearn.metrics.pairwise import cosine_similarity

r = cosine_similarity(q.transpose().dot(U_k).dot(S_k), V_k)

print('q similarities to docs in latent space', r.shape)

r

q similarities to docs in latent space (1, 6)

[18]:

array([[0.58, 1. , 0.58, 0. , 0. , 0. ]])