1. Artificial Neural Network

Let’s make our own artificial neural network (ANN) or neural network (NN)! This NN will have 1 input layer, 1 hidden layer, and 1 output layer. Each of these layers will have 2 nodes. The purpose of this NN will be to classify data points coming from two different distributions. This problem is the classic binary classification problem.

1.1. Synthetic data

There will be 2 classes of data points. For this purpose, we will sample data points from 2 different multivariate distributions as follows.

\(y_1 \sim \mathcal{N}(\mu_1, \Sigma_1)\)

\(y_2 \sim \mathcal{N}(\mu_2, \Sigma_2)\)

where

\(\mu_1 = [0, 0]\)

\(\mu_2 = [5, 5]\)

\(\Sigma_1 = \begin{bmatrix} 1 & 0.75\\ 0.75 & 1 \end{bmatrix}\)

\(\Sigma_2 = \begin{bmatrix} 1 & 0.75\\ 0.75 & 1 \end{bmatrix}\)

If we sample from \(y_1\) then all labels will be 0, and if we sample from \(y_2\) then all labels will be 1.

[1]:

import numpy as np

from scipy.stats import multivariate_normal

np.random.seed(104729)

n = 1000

n_samples = int(n / 2)

m_1 = np.array([0.0, 0.0], dtype=np.float)

m_2 = np.array([5.0, 5.0], dtype=np.float)

cov_1 = np.array([

[1.0, 0.75],

[0.75, 1.0]],

dtype=np.float)

cov_2 = np.array([

[1.0, 0.75],

[0.75, 1.0]],

dtype=np.float)

X1 = multivariate_normal.rvs(mean=m_1, cov=cov_1, size=n_samples)

X2 = multivariate_normal.rvs(mean=m_2, cov=cov_2, size=n_samples)

y_1 = np.zeros(n_samples).reshape(n_samples, 1)

y_2 = np.ones(n_samples).reshape(n_samples, 1)

X = np.vstack([X1, X2])

y = np.vstack([y_1, y_2])

print('X shape is {}'.format(X.shape))

print('y shape is {}'.format(y.shape))

X shape is (1000, 2)

y shape is (1000, 1)

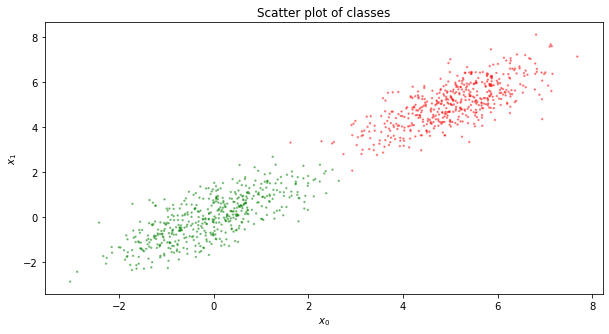

1.2. Visualize

Now, let’s plot our sample points. Red dots are our positive examples (our 1’s), and green dots are our negative examples (our 0’s). Clearly, our positive examples center around \([5, 5]\) and our negative examples center around \([0, 0]\) as we sampled our examples from the multivariate Gaussian at these means.

[2]:

%matplotlib inline

import matplotlib.pyplot as plt

fig, ax = plt.subplots(1, 1, figsize=(10, 5), sharex=False, sharey=False)

colors = ['r' if 1 == v else 'g' for v in y]

ax.scatter(X[:, 0], X[:, 1], c=colors, alpha=0.4, s=2)

ax.set_title('Scatter plot of classes')

ax.set_xlabel(r'$x_0$')

ax.set_ylabel(r'$x_1$')

[2]:

Text(0, 0.5, '$x_1$')

1.3. ANN architecture

Again, our NN has 3 layers, each layer having 2 nodes, and they are defined as follows.

Layer 1: input layer

Layer 2: hidden layer

Layer 3: output layer

These layers are fully connected, meaning, all nodes in the current layer connect to all other nodes in the next layer. For the

input to hidden layer, since there are 2 nodes in each of these layers, there is a total of \(2 \times 2 = 4\) connections, and

hidden to output layer, since there are 2 nodes in each of these layers also, there is a total of \(2 \times 2 = 4\) connections.

The way practioners represent these inter-layer connections is through matrices. There will always be \(K - 1\) matrices to represent a NN, where \(K\) is the number of layers. In our example, we will have a matrix that looks like the following.

\(W_{ih} = \begin{bmatrix} w_{11} && w_{21}\\ w_{12} && w_{22} \end{bmatrix}\)

where

\(W_{ih}\) is the connection matrix between the input to hidden layer, and

\(w_{jk}\) in the connection matrix is the

weightof the connection from thej-thinput node to thek-thhidden node.

For clarity, here are the weights defined.

\(w_{11}\) is the weight of the connection from the

firstinput node to thefirsthidden node,\(w_{12}\) is the weight of the connection from the

firstinput node to thesecondhidden node,\(w_{21}\) is the weight of the connection from the

secondinput node to thefirsthidden node,\(w_{22}\) is the weight of the connection from the

secondinput node to thesecondhidden node

While we are defining connection (or weight) matrices, the connection matrix to represent the hidden to output layer is denoted as follows.

\(W_{ho} = \begin{bmatrix} w_{11} && w_{21}\\ w_{12} && w_{22} \end{bmatrix}\)

Note how we re-used the \(w_{jk}\) notation in this \(W_{oh}\) matrix? Yes, this reuse of notation will be confusing, to say the least, but it should be clear from the context that these are new weights for the new matrix \(W_{oh}\). A lot of math and statistics, and artificial intelligence and machine learning, is dense (difficult to understand) because of all the notations (subscripts and superscripts will confuse us). Maybe I should have used a different symbol like

\(\theta_{jk}\) to represent these new and different weights inside of \(W_{oh}\), but, as you will see later, keeping the \(w_{jk}\) generalizes the forward and backward propagations between layers (catch-22 problem, because we need to understand downstream concepts to understand upstream notation quirks).

Ok, so how do we assign values to these weight matrices? It is suggested that we sample for weight values from a normal distribution as follows.

\(w_{jk} \sim \mathcal{N}(0, \eta)\)

where,

\(\eta = \frac{1}{\sqrt{m}}\) is the standard deviation, and

\(m\) is the number of incoming links to the k node.

We do not want weights that are zero or too big, which could lead to learning problems in the NN.

weights with a value of 0 will destroy the signals

weights that are very large will lead to saturation problem

Due to our NN architecture (each layer has 2 nodes, and the layers are fully connected), we will have \(\sigma = \frac{1}{\sqrt{2}} = 0.70\). Thus, we will sample random initial weights according to the following.

\(w_{jk} \sim \mathcal{N}(0, 0.70)\)

1.4. Foward and backward propagations

Great, so now we have matrices to represent our NN. How do we use it to learn how to classify our data points \(X, y\)? The idea is with the forward and backward propagations (note that backward propagation is also termed backpropagation).

1.4.1. Forward propagation

The forward propagation passes the inputs from input layer to the next all the way to the output layer. This forward propagation is just simply matrix multiplication or dot product between the weight matrix (of the current and previous layer) and outputs of the previous layer. Given the input and weight matrices, this forward propagation ultimately makes a prediction (or classification) at the output layer. For any two connected layers, the dot product between the corresponding weight matrix and outputs of the previous layer looks like the following.

\(Wx = \begin{bmatrix} w_{11} && w_{21}\\ w_{12} && w_{22} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}\)

There is one caveat with the forward propagation, and that is \(Wx\) is not used directly as the output to feed into the next layer. Typically, something like a sigmoid function is applied to the vector resulting from \(Wx\). The sigmoid function looks very simple.

\(\sigma(x) = \frac{1}{1 + e^{-x}}\)

With the exception of using the raw inputs from the input layer to the hidden layer, the output of the current layer to the next looks like the following.

\(\hat{y} = \sigma(Wx)\)

The sigmoid function is termed the activation function, and there are many types such as tanh and ReLU.

1.4.2. Backward propagation

The backward propagation is more complex and passes information backwards from the output layer all the way back to the input layer to adjust the weights inside the weight matrices. The idea of the backward propagation is that if we have a prediction, and those predictions are made with our weight matrices, we need to adjust those weights by increasing or decreasing them. Well, how do we adjust those weights up or down and by how much (direction and magnitude)? Since we start backpropagation at the output layer, we can compute the error between what we expected and what the NN predicted. That error is often computed and formalized by a loss function, and there are many of these loss functions defined for prediction and classification problems. In a lot of learning problems, a loss function is denoted as follows.

\(L(y, \hat{y}) = (y - \hat{y})^2\)

Note that we do not define the loss function as follows.

\(L(y, \hat{y}) = y - \hat{y}\), since summing over all losses may result in zero which will mislead us into believing that there is no error

\(L(y, \hat{y}) = |y - \hat{y}|\), since the function is not continuous at the minimum

Ok, so what is it about this loss function that can help us adjust the weights in direction and magnitude? If we take the derivative (or partial derivatives for the multivariate case) of the loss function, the result actually gives us the direction to move the weights towards (to the left, negative, or to the right, positive). It also gives us the magnitude as well (how much to the left, how much to the right). The derivative of the loss function is referred to as the gradient and denoted as follows.

\(\nabla L(y, \hat{y}) = \frac{\partial L}{\partial \hat{y}} = -2(y - \hat{y})\)

If \(\hat{y}\) is to the right of \(y\), then we have predicted too high, and should move to the left. If \(\hat{y}\) is the the left of \(y\), then we have predicted too low, and should move to the right. This loss function coupled with the gradient descent approach, can help us find the optimal weights to minimize the loss (or error). Typically, the way to update the current weight is as follows.

\(w_{jk}^{*} = w_{jk} - \alpha \nabla L\)

where

\(w_{jk}^{*}\) is the new weight

\(w_{jk}\) is the current weight

\(\alpha\) is the learning weight

\(\nabla L\) is the gradient of the loss function

Now, something about the learning rate \(\alpha\), why do we need it? Notice that all \(\alpha\) does is

reduces \(\nabla L\) if \(\alpha \in [0, 1)\),

preserves \(\nabla L\) if \(\alpha = 1\), or

increases \(\nabla L\) if \(\alpha \in (1, \inf)\).

If \(\nabla L\) already tells us direction and magnitude to move, why do we need \(\alpha\)? At a high level, though \(\nabla L\) tells us direction and magnitude, if we blindly follow its suggestion, we may not find the (locally) optimal minimum of the loss function (we may overshoot or not converge at all). Additionally, when learning the weights in a network using gradient descent, without \(\alpha\), the decision boundary will not take into consideration all previously seen examples and be pushed only with respect to the latest example; \(\alpha\) allows learning contributions from all previously seen examples. Here’s some more information on the learning rate, but, just be aware, that, generally speaking, any learning algorithm using gradient descent is very sensitive to \(\alpha\). There are many papers and techniques devoted only to \(\alpha\), all you need is to search the web.

1.4.2.1. Adjusting the weights

Assume our loss function is simply as stated before.

\(L(y, \hat{y}) = (y - \hat{y})^2\)

For every weight, \(w_{jk}\), we wish to define the gradient so that we may adjust our weights appropriately as mentioned before \(w_{jk}^{*} = w_{jk} - \alpha \nabla L\). The gradient of \(L\) with respect to the weight \(w_{jk}\) is written as follows.

\(\nabla L_{jk} = \frac{\partial L}{\partial w_{jk}}\)

Now, \(\frac{\partial L}{\partial w_{jk}}\) itself can be broken down or decomposed into three terms using the chain rule.

\(\frac{\partial L}{\partial w_{jk}} = \frac{\partial L}{\partial \hat{y}_k} \frac{\partial \hat{y}_k}{\partial x_j} \frac{\partial x_j}{\partial w_{jk}}\)

where

\(\frac{\partial L}{\partial \hat{y}_k}\) is the change of \(L\) over the output \(\hat{y}_k\),

\(\frac{\partial \hat{y}_k}{\partial x_j}\) is the change of the output \(\hat{y}_k\) over the input \(x_j\), and

\(\frac{\partial x_j}{\partial w_{jk}}\) is the change of the input \(x_j\) over the weight \(w_{jk}\).

If we can compute this quantity, then we know how to precisely update the weights in the matrices. In our NN example, we start backpropagation from the output layer to the hidden layer and then the hidden to the input layer, using this simple formula. That is backpropagation in a NN and that is how we update the weights. This gradient of the loss function is the learning in NN and also deep learning NN architecture. At a high level, in forward propagation, we have made a prediction with

the input and current weights; in backward propagation, there are errors in the prediction, and so we use the errors to adjust the weights via gradients and the learning rate.

1.4.2.2. So, what does \(\frac{\partial L}{\partial \hat{y}_k}\) look like?

Very simply, it looks like the following.

\(\frac{\partial L}{\partial \hat{y}_k} = \frac{\partial}{\partial \hat{y}_k} (y - \hat{y})^2 = -2(y - \hat{y})\)

1.4.2.3. So, what does \(\frac{\partial \hat{y}_k}{\partial x_j}\) look like?

If you recall, \(\hat{y} = \sigma(w'x) = \frac{1}{1 + e^{-w'x}}\). But, let’s just focus on \(\sigma(z)\) for a second without needing to worry about \(w'x\) or the crazy fraction and exponentiation. We know that the derivative of \(\sigma(z)\) is as follows.

\(\nabla \sigma(z) = \frac{\partial}{\partial z} \sigma(z) = \sigma(z) (1 - \sigma(z))\)

Huh, so, if we substitute \(z = w'x\) back in, then we get the following.

\(\frac{\partial \hat{y}_k}{\partial x_j} = \frac{\partial}{\partial x_j} \sigma(w'x) = \sigma(w'x) (1 - \sigma(w'x))\)

1.4.2.4. So, what does \(\frac{\partial x_j}{\partial w_{jk}}\) look like?

Remember \(\hat{y} = \sigma(w'x)\)? When we differentiated, \(\frac{\partial}{\partial x_k} \sigma(w'x)\), we had the expression inside the \(\sigma(z)\) that also needed to be differentiated with respect to \(w_{jk}\). In general, when \(f(z) = w_1 x_1 + w_2 x_2 + \ldots + w_n x_n\), then

\(\frac{\partial}{\partial w_j} f(z) = \frac{\partial}{\partial w_j} w_1 x_1 + w_2 x_2 + \ldots + w_n x_n = x_j\)

Thus,

\(\frac{\partial x_j}{\partial w_{jk}} = x_j\)

1.4.2.5. Putting it all together to adjust the weights

Thus, putting it all together, we have something that looks like the following (note we ultimately drop the constant term, 2).

\(\nabla L_{jk} = \frac{\partial L}{\partial w_{jk}} = \frac{\partial L}{\partial \hat{y}_k} \frac{\partial \hat{y}_k}{\partial x_j} \frac{\partial x_j}{\partial w_{jk}} = -2(y - \hat{y}) \sigma(w'x) (1 - \sigma(w'x)) x_j \approx -(y - \hat{y}) \sigma(w'x) (1 - \sigma(w'x)) x_j\)

The value of this computation is a scalar (a single value), that we can plug back into \(w_{jk}^{*} = w_{jk} - \alpha \nabla L_{jk}\).

That’s it. Backpropagation in NN.

1.5. Learning example

1.5.1. Initialize the weights

Here, we show how to do the forward and backward propagations. First, we must initialize the weight matrices \(W_{ih}\) and \(W_{ho}\).

[3]:

from scipy.stats import norm

loc = 0.0

scale = 1 / np.sqrt(2)

W_ih = np.random.normal(loc=loc, scale=scale, size=(2, 2))

W_ho = np.random.normal(loc=loc, scale=scale, size=(2, 2))

1.5.2. Training: foward and backward propagations

Now, we can feed our training data one at a time to the NN. For each training example, we will do a forward and backward propagation. Also, note that we randomly select a sample for learning. By feeding one example at a time randomly to the NN, this approach is called stochastic gradient descent. In general, there are 3 ways to do gradient descent.

Batch gradient descent is when you send all the examples through forward propagation, average the loss and then backprogate.

Stochastic gradient descent is when you send one random example at a time through forward propagation, and then backprogate the error.

Mini-batch gradient descent is when you send a few number of examples through (the number is a parameter that is specified by you and is called the batch size), average the loss and then backpropagate. Obviously, this approach combines batch and stochastic gradient descents.

Each one of these approaches have their own advantages and disadvantages, though, it is claimed that mini-batch gradient descent is the most common one. Commonly, in batch gradient descent, several passes over the entire dataset is conducted, and each pass is termed and epoch. Stochastic gradient descent is entirely appropriate for online learning (or streaming data) since as new data come through, you can train the NN by feeding these new examples one by one into the NN. These are not the only approaches to gradient descent, a whole area of research is devoted to gradient descent algorithms.

[4]:

from scipy.special import expit

lr = 0.1

positive = np.array([0.01, 0.99], dtype=np.float, ndmin=2).T

negative = np.array([0.99, 0.01], dtype=np.float, ndmin=2).T

for row in range(X.shape[0]):

r = np.random.randint(X.shape[0])

inputs = np.array(X[r, :], ndmin=2).T

targets = positive if y[r, 0] == 1.0 else negative

# forward propagation

hidden_inputs = W_ih.dot(inputs)

hidden_outputs = expit(hidden_inputs)

final_inputs = W_ho.dot(hidden_outputs)

final_outputs = expit(final_inputs)

# compute errors

output_errors = targets - final_outputs

hidden_errors = W_ho.T.dot(output_errors)

# backward propagation

delta_ho = np.dot(output_errors * final_outputs * (1.0 - final_outputs), np.transpose(hidden_outputs))

delta_ih = np.dot(hidden_errors * hidden_outputs * (1.0 - hidden_outputs), np.transpose(inputs))

W_ho += lr * delta_ho

W_ih += lr * delta_ih

1.5.3. Query the model with a negative example

Now, we query the model with the first training sample, which is a negative (zero, 0) example. As you can see, the NN classifies this example more as a negative example.

[5]:

r = 0

inputs = X[r, :].T

hidden_inputs = W_ih.dot(inputs)

hidden_outputs = expit(hidden_inputs)

final_inputs = W_ho.dot(hidden_outputs)

final_outputs = expit(final_inputs)

print('{}: x={}, y={} ---> {}'.format(r, X[r, :], y[r, 0], final_outputs))

0: x=[-0.22117921 0.0354449 ], y=0.0 ---> [0.72796373 0.35839938]

1.5.4. Query the model with a positive example

We also query the model with the last training sample, which is a positive (one, 1) example. As you can see, the NN classfies this example more as a positive example.

[6]:

r = n-1

inputs = X[r, :].T

hidden_inputs = W_ih.dot(inputs)

hidden_outputs = expit(hidden_inputs)

final_inputs = W_ho.dot(hidden_outputs)

final_outputs = expit(final_inputs)

print('{}: x={}, y={} ---> {}'.format(r, X[r, :], y[r, 0], final_outputs))

999: x=[4.23706484 4.85350827], y=1.0 ---> [0.22979532 0.73710209]

1.5.5. Predict on all the data

Here, we make predictions on all the samples and visualize the results of the predictions.

[7]:

import pandas as pd

def query_predictions():

data = []

for r in range(n):

inputs = X[r, :].T

hidden_inputs = W_ih.dot(inputs)

hidden_outputs = expit(hidden_inputs)

final_inputs = W_ho.dot(hidden_outputs)

final_outputs = expit(final_inputs)

x1 = X[r, 0]

x2 = X[r, 1]

o_0 = final_outputs[0]

o_1 = final_outputs[1]

y_true = y[r, 0]

y_pred = 1.0 if o_1 > o_0 else 0.0

is_correct = 1 if y_true == y_pred else 0

t = x1, x2, y_true, y_pred, is_correct, o_0, o_1

data.append(t)

return pd.DataFrame(data, columns=['x1', 'x2', 'y_true', 'y_pred', 'is_correct', 'output_0', 'output_1'])

predictions_df = query_predictions()

print('number of correct predictions {}'.format(predictions_df['is_correct'].sum()))

number of correct predictions 894

As you can see, the number of correct predictions on the training data is about 89%.

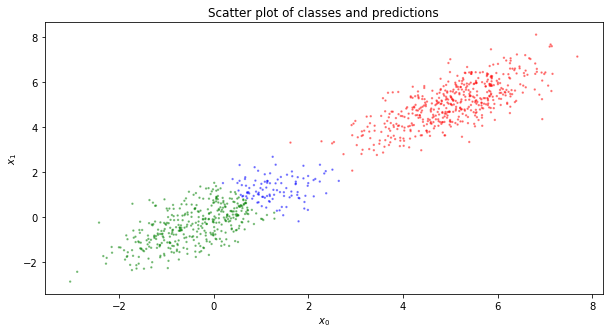

1.5.6. Visualize the predicted results

Now, visualize the results of the predictions. The color coding is as follows.

If the example is negative and the prediction is correct, then color the data green

If the example is negative and the prediction is incorrect, then color the data blue

If the example is positive and the prediction is correct, then color the data red

If the example is positive and the prediction is incorrect, then color the data magenta

We re-plot the original data with the predicted data.

[8]:

def get_color(r):

if r['y_true'] == 0.0 and r['is_correct'] == 1:

return 'g'

elif r['y_true'] == 0.0 and r['is_correct'] == 0:

return 'b'

elif r['y_true'] == 1.0 and r['is_correct'] == 1:

return 'r'

else:

return 'm'

fig, ax = plt.subplots(1, 1, figsize=(10, 5), sharex=False, sharey=False)

colors = ['r' if 1 == v else 'g' for v in y]

ax.scatter(X[:, 0], X[:, 1], c=colors, alpha=0.4, s=2)

ax.set_title('Scatter plot of classes')

ax.set_xlabel(r'$x_0$')

ax.set_ylabel(r'$x_1$')

fig, ax = plt.subplots(1, 1, figsize=(10, 5), sharex=False, sharey=False)

colors = [get_color(r) for i, r in predictions_df.iterrows()]

ax.scatter(predictions_df['x1'], predictions_df['x2'], c=colors, alpha=0.4, s=2)

ax.set_title('Scatter plot of classes and predictions')

ax.set_xlabel(r'$x_0$')

ax.set_ylabel(r'$x_1$')

[8]:

Text(0, 0.5, '$x_1$')

Note that the incorrect predictions are mostly, if not entirely, with the negative examples being incorrectly classified. Also, note that the misclassification of negative examples as positive ones are at the edge close to the center of the positive examples. The misclassifications are probably due to our lack of bias nodes in the layers; had we added bias nodes, perhaps the descision boundary could have been pushed to the right. Additionally, we did not do any batch normalization, and that certainly will help with new, unseen data coming through to be classified.